Approaching the world’s environmental problems through the Second Law (Entropy Law) of Thermodynamics

Contents

- 1 Introduction

- 2 General environmental trends

- 3 The concept of entropy

- 4 How entropy plays a role in the economic process and re-defines concepts such as efficiency and sustainability

- 5 A plea for a redefinition of efficiency and sustainability

- 6 Transformation of terrestrial resources from available to non-available

- 7 Summary of entropy and economy

- 8 Further Reading

Introduction

Humanity has received many warning signs about economic growth and its negative impact on the environment. Among the first to sound the alarm was Thomas Robert Malthus, who in 1798 published “An Essay on the Principle of Population.” In that essay, he predicted that world population growth would outpace economic growth, which would be constrained by the limited amount of arable land, and that this imbalance would lead to social misery. Nearly two centuries later, in 1971, the economist Nicholas Georgescu-Roegen pointed out that economic processes are not cyclical, as is generally assumed, and that in the long run they will exhaust the world’s natural resources. In 1972, the Club of Rome published its shocking report, The Limits to Growth, followed in 1989 by another well-known book, Jeremy Rifkin’s Entropy: Into the Greenhouse World. In this article, we will show how the Second Law affects the economic processes that shape our environment. Although ecological economists have paid increasing attention in recent years to the true impact of entropy on economics, the concept remains controversial within the mainstream economic community.

General environmental trends

Over the last 50 years or so, awareness of the impact of human actions on the environment has increased. For at least 200 years, humanity has created large-scale economic activities in order to meet market demands. The overarching Western economic model is one of continuous growth, predicated on the idea that growth will come automatically from technological “progress.” There are many reasons or justifications for this glorification of growth, which is perhaps an inherent property of the economic process. For instance, some believe that growth is needed to eradicate poverty and maintain full employment. Some governments use economic growth to prevent their repressive political systems from being undermined. Others believe growth is the only way to keep long-term profits up, since in a steady state profits tend to decline. Whatever the merits of these beliefs, it is a fact that human economies affect the environment, and entropy offers one way to describe that impact. But first, let’s consider a few facts about our economic system.

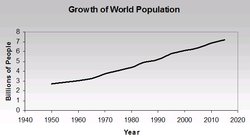

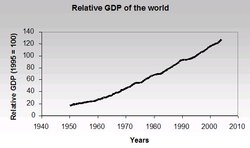

Undoubtedly, one of the most important factors that determine world economic activity is population. Figure 1 shows the growth of world population over time. Global population increased dramatically, from 2.3 billion in 1950 to 5.7 billion in 1995. Depending on which growth scenario (low, medium, or high) is assumed, world population could reach as many as 11.2 billion people by 2050. With economic growth spreading to developing parts of the world, one would expect global economic activity to increase greatly as well, as illustrated in Figure 2. The world’s economic output roughly tripled from 1970 through 2002, while world population grew by approximately 50% over the same period. Such increased economic activity obviously requires natural resources such as energy (mainly from fossil fuels) and materials (for example, wood, iron, and copper). Natural resources can be characterized as renewable or non-renewable. Renewable resources, such as solar, wind, and hydro energy, are renewable in the sense that we and future generations theoretically have continuous access to them, although their output at any one time is limited. Non-renewable resources include all fossil fuels and natural materials such as minerals, as well as metals that are not recycled. Non-renewable resources that are not recycled (fossil fuels, for example) are irreplaceable: what we consume today will simply not be available to future generations.
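The growth figures above can be turned into average yearly rates. The following is a minimal sketch, assuming steady compound growth over the 32 years from 1970 to 2002 (real growth was of course uneven); the helper name `cagr` is our own illustrative choice.

```python
def cagr(ratio: float, years: float) -> float:
    """Compound annual growth rate implied by an overall growth ratio."""
    return ratio ** (1.0 / years) - 1.0

years = 2002 - 1970                         # 32-year period from the text
output_rate = cagr(3.0, years)              # world output roughly tripled
population_rate = cagr(1.5, years)          # population grew about 50%
per_capita_rate = cagr(3.0 / 1.5, years)    # output per person roughly doubled

print(f"output:     {output_rate:.2%} per year")
print(f"population: {population_rate:.2%} per year")
print(f"per capita: {per_capita_rate:.2%} per year")
```

Under this assumption, output grew by roughly 3.5% per year and population by roughly 1.3% per year, so output per person grew by about 2.2% per year: resource demand grew much faster than population alone would suggest.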

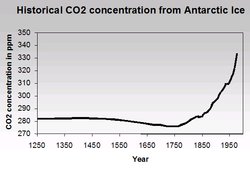

Apart from the need for natural resources (discussed later in this article), economic processes also produce waste. One of the most intensely discussed topics in this area is air pollution, notably the impact of greenhouse gases on global warming, the destruction of the ozone layer, and the phenomenon of acid rain. Greenhouse gases are relatively transparent to the sunlight that strikes the upper atmosphere, but largely opaque to the thermal radiation that is re-emitted from the earth’s surface. Thus, greenhouse gases lead to a net energy gain at the earth’s surface. These gases include carbon dioxide (CO2), chlorofluorocarbons (CFCs, such as CFCl3), methane (CH4), and nitrous oxide (N2O). From concentration trend graphs, it is undeniable that air pollution is present today, and in fact it has been with us for centuries! Figure 3 shows how the atmospheric concentration of CO2, produced by the combustion of fossil fuels, has increased over the years. Before 1750 the CO2 concentration hovered around 280 parts per million (ppm), but after 1800 it rose sharply, which of course coincides with the beginning of the Industrial Revolution and its associated use of wood and coal as fuel.

The increase of CO2 (and of CFCs since about 1960) has been linked to global warming (the enhanced greenhouse effect), as described clearly in the Fourth Assessment Report of the Intergovernmental Panel on Climate Change (IPCC). Global warming is expected to raise average temperatures by roughly 2 to 4 °C over the next 100 years, with ominous consequences such as rising sea levels.

Meanwhile, CFCs are degrading the ozone layer in the stratosphere, at heights between 12 and 70 kilometers (km). The ozone layer, a natural shield that protects life on earth from the extreme level of ultraviolet (UV) light from the sun, forms when oxygen molecules (O2) in the upper atmosphere absorb energy from sunlight and break apart into single, reactive atoms. These atoms then combine with oxygen molecules to form ozone (O3). Ozone absorbs UV radiation from the sun and so protects plants and animals from an overdose of UV. CFCs have been widely used as refrigerants in cars, homes, and industry. Once they reach the stratosphere, these chemicals are eventually broken apart, releasing chlorine that reacts with ozone and destroys it. As a result, since about 1985 a growing “hole” has developed in the ozone layer. Although measures have been taken under the Montreal Protocol to ban CFCs and replace them with less harmful agents, CFCs can persist in the atmosphere for up to 400 years. Therefore, even a strict ban on CFCs cannot immediately reverse the damage that is now occurring to the ozone layer. Recent reports indicate that some areas of the ozone layer outside Antarctica have stabilized, so the benefits of the Montreal Protocol may be beginning to show.
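The ozone chemistry described above can be summarized by the standard textbook reactions below: ozone formation, UV absorption by ozone, and the chlorine catalytic cycle that destroys it. This level of chemical detail is our own addition, not part of the original argument; M stands for any third molecule (for example N2) that carries away excess energy, and hν denotes a photon of sunlight.

```latex
\begin{aligned}
\mathrm{O_2} + h\nu &\rightarrow \mathrm{O} + \mathrm{O} && \text{(UV splits oxygen)}\\
\mathrm{O} + \mathrm{O_2} + M &\rightarrow \mathrm{O_3} + M && \text{(ozone forms)}\\
\mathrm{O_3} + h\nu &\rightarrow \mathrm{O_2} + \mathrm{O} && \text{(ozone absorbs UV)}\\[4pt]
\mathrm{Cl} + \mathrm{O_3} &\rightarrow \mathrm{ClO} + \mathrm{O_2} && \text{(chlorine attacks ozone)}\\
\mathrm{ClO} + \mathrm{O} &\rightarrow \mathrm{Cl} + \mathrm{O_2} && \text{(chlorine is regenerated)}
\end{aligned}
```

Adding the last two reactions gives a net O3 + O → 2 O2, with the chlorine atom released unchanged. This is why a single chlorine atom can destroy many ozone molecules, and why the damage outlasts the emissions.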

A group that has definitely put the environmental issue on the political agenda is the Club of Rome. Aurelio Peccei, an Italian industrialist, and Alexander King, a Scottish scientist, felt that something needed to be done to protect the earth’s environment from industrial destruction. In 1968, they called an international meeting of 36 European scientists in Rome. In 1972, this group published a report, The Limits to Growth, which described the relationship between economic growth and damage to the environment. The report immediately had a tremendous impact on public and political discussion of environmental issues (or perhaps it finally started a much-needed broad environmental discussion in society). The group developed a dynamic global model that incorporated five major trends: increasing industrial activity, escalating growth of world population, depletion of natural resources, widespread malnutrition, and finally, a deteriorating environment. The model’s shocking conclusion was that, if nothing were done, exhausted natural resources would cause a global collapse well before the year 2100. The report also included different economic scenarios and their impacts on resources. The grim outlook was that even if we could add resources or implement new technologies, the collapse could not be avoided, because the fundamental problem is exponential growth in a finite and complex system. The group proposed a way out by moving to a stable state in which industrial output per capita is frozen at the 1975 level and global population growth ceases. Today, the Club of Rome still exists, has members from 52 nations, and continues to issue reports on environmental matters.
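Why adding resources buys so little time under exponential growth can be shown with a small calculation. The sketch below compares how long a finite reserve lasts at constant consumption (a “static” lifetime) with how long it lasts when consumption grows exponentially, by solving C0·(e^(rT) − 1)/r = R for T. The reserve sizes and the 2% growth rate are hypothetical round numbers chosen for illustration, not data from the report.

```python
import math

def static_index(reserve: float, consumption: float) -> float:
    """Years a reserve lasts if yearly consumption stays constant."""
    return reserve / consumption

def exponential_index(reserve: float, consumption: float, growth: float) -> float:
    """Years a reserve lasts if consumption grows continuously at `growth` per year.

    Cumulative use after T years is consumption * (exp(growth*T) - 1) / growth;
    setting that equal to the reserve and solving for T gives the formula below.
    """
    return math.log(1.0 + growth * reserve / consumption) / growth

# Hypothetical reserves, expressed in years of today's consumption (1 unit/year)
for reserve in (100, 200, 500):
    print(f"reserve = {reserve}: static {static_index(reserve, 1.0):.0f} y, "
          f"exponential {exponential_index(reserve, 1.0, 0.02):.0f} y")
```

With 2% annual growth, a reserve that would last 100 years at today’s rate lasts only about 55 years, and even a fivefold larger reserve lasts only about 120 years: exponential growth in a finite system overwhelms additions to the resource base.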

A slightly different way to put the ecological challenge in perspective is through the concept of carrying capacity: the maximum persistent load that a given environment can support for a certain purpose (for instance, to sustain human civilization). Human civilization needs energy, minerals, wood, food, and so on, so carrying capacity can be expressed as the land area needed to support our civilization. The calculations of Rees and his co-workers yielded remarkable results. For instance, in 1995 about 1.5 hectares of productive land per capita were available, taking into account all the productive land on earth. However, the citizens of Vancouver, BC, for example, needed about 4.2 hectares per capita in 1991 to sustain their consumption, which means that the city’s 472,000 inhabitants required nearly 2 million hectares of land, compared with an urban area of only 11,400 hectares. An even more dramatic example is the Netherlands. Because of its high population density, this country draws resources from a land area 15 times larger than the entire country. Looking at the situation globally, for the rest of the world’s population to live at the same level as North Americans do today, humanity would need another habitable planet at least as big as earth.
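The Vancouver figures can be checked with simple arithmetic. This sketch uses only the numbers quoted in the text; the variable names are our own.

```python
population = 472_000          # Vancouver inhabitants, 1991
footprint_per_capita = 4.2    # hectares of land needed per person
urban_area = 11_400           # hectares actually occupied by the city
fair_share = 1.5              # hectares of productive land per person worldwide (1995)

total_footprint = population * footprint_per_capita
print(f"total footprint: {total_footprint:,.0f} ha")
print(f"footprint / urban area: {total_footprint / urban_area:.0f}x")
print(f"per-capita demand vs. global share: {footprint_per_capita / fair_share:.1f}x")
```

The result is about 1.98 million hectares, roughly 174 times the city’s own area, and each resident uses about 2.8 times the average productive land available per person on earth.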

The considerations above are only meant to set the scene for the discussion in the rest of this article. Many more details about ecology can be found in a vast array of literature, but that exploration is beyond the scope of this article. However, the picture is clear: economic activity does impact our environment. In the following pages, we will discover how the concept of entropy can help us in understanding the interaction between the economic process and the depletion of terrestrial resources, as well as mounting pollution.

The concept of entropy

Before we continue, we must introduce some background on the Second Law of Thermodynamics, because, as we will see, it is this law that plays a decisive role in how we approach our environmental problems.

Entropy (S) is a concept from thermodynamics. For heat exchanged reversibly at a constant temperature, the change in entropy equals the amount of heat exchanged (Q) divided by the absolute temperature (T) at which the exchange occurs: ΔS = Q/T. Rudolf Clausius introduced this definition around 1865. His motivation was that something was missing: the First Law of Thermodynamics (conservation of energy) could not explain why heat always flows from hot to cold regions and never the other way around. While studying the properties of this new quantity, he concluded that in an isolated system (a system that cannot exchange energy or matter with its environment) the entropy always increases in processes that change the energy distribution within that system. As we are about to find out, this proved to be an important conclusion.
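Clausius’s definition already explains the direction of heat flow. In the sketch below, heat Q leaves a hot body and enters a cold one; both bodies are assumed large enough that their temperatures stay constant, so ΔS = Q/T applies to each. The temperatures and amount of heat are illustrative values.

```python
def entropy_change_heat_flow(q: float, t_hot: float, t_cold: float) -> float:
    """Total entropy change (J/K) when heat q (J) leaves a body at t_hot (K)
    and enters a body at t_cold (K), both at constant temperature."""
    ds_source = -q / t_hot   # the body giving up heat loses entropy
    ds_sink = q / t_cold     # the body receiving heat gains entropy
    return ds_source + ds_sink

# 1000 J flowing from 400 K to 300 K: total entropy rises
print(entropy_change_heat_flow(1000.0, 400.0, 300.0))
# The reverse direction (300 K to 400 K) would lower total entropy: never observed
print(entropy_change_heat_flow(1000.0, 300.0, 400.0))
```

Because the same Q is divided by a smaller T at the cold end, the gain always outweighs the loss when heat flows from hot to cold (about +0.83 J/K here), and the reverse flow would require the total entropy to fall.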

Whenever we say that we are consuming energy, all we are really doing is transforming or redistributing it. Burning fossil fuels is an example of energy transformation: we transform chemical energy stored in the bonds between the atoms of the fuel into heat. That heat can then be put to a useful purpose, such as powering a car, and in doing so we transform part of the heat into work. The net result is that the fuel is completely gone (except for some ash or soot), having been transformed into gaseous products such as CO2 and water; that we have traveled a certain distance (which is what the work obtained from the heat was used for); and that the remaining heat, which we could not convert into work, has diffused into the air. The question now arises whether we could come up with a clever way to return the energy to its initial condition, so that we could use it again to power our car. If we had to deal only with the First Law, this would in principle be achievable: we have not “consumed” the energy, only transformed it into other forms (work and heat) and spread it over a larger volume (it was first contained in a compact volume of fuel, but at the end it is all over the place). Unfortunately, experience tells us that this idea has never materialized. In fact, if we could do this, we would have invented a kind of perpetual motion machine. So what is going on? Why can we not do this?

This is where the Second Law of Thermodynamics, the entropy law, comes in. If we calculated the entropy for the situation described above, we would find that it has increased. The Second Law forbids the entropy of the universe as a whole from decreasing. Every time we transform (“use”) energy, the entropy automatically increases. The entropy of a small system may indeed decrease; for example, the entropy of a pot of hot water decreases as it cools. However, the total entropy of the universe still increases, because the heat flows from the pot of water into the surroundings. Of course, you could argue that after our car ride we could capture all the molecules produced by burning the fuel and restore them to our original amount of fuel. That can indeed be done (at least in principle), but doing so requires transforming even more energy, and thus producing even more entropy! So we are trapped in a circle in which we can only produce entropy and never undo it.
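The pot-of-water example can be made quantitative. This sketch assumes 1 kg of water cooling from 80 °C to a room at 20 °C, a constant specific heat of about 4186 J/(kg·K), and a room large enough that its temperature does not change; all of these are illustrative assumptions.

```python
import math

C_WATER = 4186.0  # J/(kg*K), approximate specific heat of liquid water

def cooling_entropy(mass_kg: float, t_start: float, t_env: float):
    """Entropy changes (J/K) when water cools from t_start to the room temperature t_env (kelvin)."""
    ds_water = mass_kg * C_WATER * math.log(t_env / t_start)  # negative: the water loses entropy
    q_released = mass_kg * C_WATER * (t_start - t_env)         # heat handed to the room (J)
    ds_room = q_released / t_env                               # room absorbs it at constant t_env
    return ds_water, ds_room, ds_water + ds_room

dw, dr, total = cooling_entropy(1.0, 353.15, 293.15)  # 80 °C -> 20 °C
print(f"water: {dw:.0f} J/K, room: {dr:.0f} J/K, total: {total:+.0f} J/K")
```

The water loses roughly 780 J/K, but the room gains roughly 857 J/K, so the total still rises by about 77 J/K: a local decrease is always paid for by a larger increase elsewhere.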

Although the total amount of energy available to us does not change at all, the Second Law forbids us from recycling energy, so to speak. Energy is useful to us only when it is in a form capable of doing work; this capacity to do work is called exergy. Another, more popular, way to put this is to say that the quality of energy (its exergy) degrades as work is done. Entropy can be used to measure the quality of energy, which we can summarize as follows:

High quality energy (capacity to do work) has a low entropy value

Low quality energy (no or less capacity to do work) has a high entropy value
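The link between entropy and energy quality can be illustrated for heat. A common way to express the exergy of heat Q available at temperature T is Q·(1 − T0/T), where T0 is the temperature of the surroundings (the Carnot factor). This sketch assumes surroundings at 25 °C and compares the same 1000 J of heat at different temperatures.

```python
T0 = 298.15  # K, assumed temperature of the surroundings

def heat_exergy(q: float, t_source: float, t0: float = T0) -> float:
    """Maximum work (J) obtainable from heat q (J) at t_source (K),
    with waste heat rejected to surroundings at t0 (K)."""
    return q * (1.0 - t0 / t_source)

q = 1000.0  # joules of heat
for t in (1000.0, 350.0, T0):
    print(f"T = {t:7.2f} K  entropy Q/T = {q / t:5.2f} J/K  exergy = {heat_exergy(q, t):6.1f} J")
```

The same amount of energy carries little entropy and about 700 J of exergy at 1000 K, more entropy and only about 150 J of exergy at 350 K, and no exergy at all once it has reached the temperature of the surroundings: high quality goes with low entropy.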

To recap: the Second Law teaches us that energy transformations always proceed from a higher-quality state to a lower-quality state; in other words, the amount of exergy decreases. Every energy transformation inherently produces entropy, and that production can never be reversed. There is a fundamental reason why entropy can only increase and, in a way, make our lives miserable. It was Ludwig Boltzmann who, around 1880, connected entropy with the mechanical properties of molecules and atoms.

Around 1900 there was still a fierce debate among scientists about whether atoms really existed. Boltzmann was convinced that they did, and he realized that models based on atoms and molecules, their energy distribution, their speed, and their momentum could greatly help us understand physical phenomena. Because atoms were thought to be very small, even a relatively small system contains a tremendous number of them: one milliliter of water, for example, contains about 3×10²² molecules (roughly 10²³ atoms). Clearly it is impossible to track the energy and velocity of each individual atom. Boltzmann therefore introduced a mathematical treatment based on statistical methods to describe the properties of a given physical system (for example, the relationship between the temperature, pressure, and volume of one liter of air). The idea behind his statistical mechanics was to describe the properties of matter in terms of the mechanical properties of atoms or molecules. In doing so, he was finally able to derive the Second Law of Thermodynamics around 1890 and to show the relationship between atomic properties and the entropy of a given system. Building on Boltzmann’s results, Max Planck formulated what later became known as the Boltzmann expression:
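The number of particles in one milliliter of water follows from Avogadro’s number and the molar mass of water. This sketch assumes 1 mL of water weighs 1 g; note that the result of about 3×10²² counts molecules, and since each H2O molecule has three atoms, the number of atoms is about three times larger.

```python
AVOGADRO = 6.02214076e23   # particles per mole (exact SI value)
MOLAR_MASS_WATER = 18.015  # grams per mole of H2O

mass_g = 1.0                          # 1 mL of water weighs about 1 g
moles = mass_g / MOLAR_MASS_WATER
molecules = moles * AVOGADRO
atoms = 3 * molecules                 # two hydrogen atoms and one oxygen atom each
print(f"{molecules:.2e} molecules, {atoms:.2e} atoms")
```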

S = k ln(W)

Here S is the entropy, k is the Boltzmann constant, ln is the natural logarithm, and W is the number of possible realizations (microstates) of the system. This last quantity is often hard to grasp.

The value of W is basically a measure of how likely a system is to exist with certain characteristics. Let me give an example. Imagine a deck of four different cards that are identical in size and weight. The deck as a whole can be described by parameters such as the number of cards, the thickness of the deck, its weight, and so on. With four cards there are 4×3×2×1 = 24 possible orderings, all of which lead to the same deck in terms of these parameters. Therefore, in this case W = 24. The Boltzmann constant k equals 1.38×10⁻²³ J/K, so the entropy is S = k ln 24 ≈ 4.4×10⁻²³ J/K. The more ways a system can realize itself (and with the enormous number of atoms in even one gram of material, this number is huge), the more likely we are to observe that system, and the higher its entropy. Now it is easier to understand Clausius’s observation that entropy increases all the time: a given (isolated) system tends to become more disordered, because disordered states are more likely to occur. Unfortunately, the more disordered a system is, the less useful it is from a human perspective. Energy is much more useful when it is captured in a liter of fuel than when the same amount of energy, after we burn the fuel, is distributed all over the environment! Clearly the entropy went up, because the disorder increased after burning.
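The card example can be computed directly from S = k ln W. This sketch assumes, as above, that every ordering of the distinct cards is an equally likely microstate; the helper name `boltzmann_entropy` is our own.

```python
import math

K_B = 1.380649e-23  # J/K, Boltzmann constant (exact SI value)

def boltzmann_entropy(w: int) -> float:
    """S = k ln W for a system with W equally likely microstates."""
    return K_B * math.log(w)

w_cards = math.factorial(4)              # 4 distinct cards -> 24 orderings
print(w_cards, boltzmann_entropy(w_cards))

w_eight = math.factorial(8)              # 8 distinct cards -> 40,320 orderings
print(w_eight, boltzmann_entropy(w_eight))
```

Doubling the number of cards multiplies W by 1,680, which hints at how astronomically large W becomes for the roughly 10²³ atoms in a gram of material, and why the most disordered arrangements are overwhelmingly the most likely.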

How entropy plays a role in the economic process and re-defines concepts such as efficiency and sustainability

Relationship between thermodynamics and economic processes

What is the “economic process”? Many definitions can be found, but here we will use the description by Nicholas Georgescu-Roegen in his seminal book, The Entropy Law and the Economic Process: the main objective of the economic process is to ensure the long-term existence of the human species. Another way of putting it is that the output of the economic process is designed to maintain and increase the enjoyment of life. He identifies three important partial economic processes: agriculture, mining, and industrial manufacturing.

Sadi Carnot, a young French military engineer, was one of the first scientists to apply science to industrial problems. He wanted to understand the efficiency of the steam engine (that is, how much coal was needed to deliver a given amount of work), and he did so through a painstakingly detailed analysis of the engine’s cyclic operation. Only after that analysis did it become clear that the maximum efficiency depends only on the temperatures of the steam in the boiler and of the vapor in the condenser. This ended an earlier belief that there was no fundamental limit to the efficiency of the steam engine. We learned that of the heat available at high temperature, only a portion can be used to perform work; the rest is rejected at the lower temperature of the condenser and can no longer be used to deliver work.
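In modern notation, Carnot’s limit is η = 1 − T_condenser/T_boiler, with both temperatures in kelvin. The sketch below applies it to a hypothetical engine with a boiler at 200 °C and a condenser at 40 °C; these temperatures are illustrative, not historical data.

```python
def carnot_efficiency(t_boiler: float, t_condenser: float) -> float:
    """Upper bound on the fraction of heat that can be turned into work (temperatures in kelvin)."""
    if not t_boiler > t_condenser > 0:
        raise ValueError("need t_boiler > t_condenser > 0 (kelvin)")
    return 1.0 - t_condenser / t_boiler

eta = carnot_efficiency(473.15, 313.15)  # 200 °C boiler, 40 °C condenser
print(f"maximum efficiency: {eta:.1%}")
print(f"heat that must be rejected: {1 - eta:.1%}")
```

Even this ideal engine can convert at most about 34% of the heat into work; the remaining two-thirds must be rejected at the condenser, no matter how cleverly the engine is built.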

Thus, in applying heat to do work, we don’t actually consume energy (or heat, for that matter); the total amount of energy stays exactly the same. What changes is the quality of the energy: it is transformed from the available form (available to perform work) into the unavailable form (which can no longer perform work). The amount of exergy decreases as useful work is extracted. Now we come to the heart of the matter. In typical economic models, the economic process is pictured as cyclic. This image arises (among other reasons) because money, a not unimportant item in an economy, passes from one hand to another without ever disappearing. At first sight, energy seems to behave the same way: it, too, stays constant throughout the entire economic process, so why should we worry? But we know better; we know where the fallacy lies. The entropy of an isolated system increases, and this increase is irreversible. It all boils down to the Entropy Law, which says that there is only one direction we can go on this planet and no way back: in all our processes we degrade the quality of the energy available to us. Therefore, the assumption that the economic process is cyclic is a serious misjudgement. More about this in the sections that follow.

Example of an economic process and the Entropy Law

In this section we want to analyze in more detail how the connection between the economic process and the Second Law comes about. To do so, we of course need a description of the economic process. Although (as mentioned above) all economic processes can be categorized as agriculture, mining, or manufacturing, we cannot possibly analyze them all because of the immense amount of detail involved. For that reason, we’ll concentrate on a single manufacturing example: the life cycle of glass. Why glass? Well, I’ve always been impressed and intrigued by the art of glassblowing. But there are more practical reasons, too. Glass plays a role in many aspects of everyday life: tableware, windows, eyeglasses, television tubes, and so on. And although glass itself does not contaminate our environment, the glass-making process certainly does, as we will see. Finally, glass is one of the best examples of a material that can be recycled almost indefinitely, but we will see that its recycling behavior is somewhat misleading as well. Most glass is produced in two forms, containers and flat glass. For this analysis, we will consider only glass bottles.

The life of a glass bottle starts with gathering the raw material, called silica (a chemical compound of silicon and oxygen, SiO2), which is found as quartz sand or, in the case of recycling, as crushed glass bottles. Quartz sand consists of the same compound (SiO2) as the main component of glass, but it is crystalline rather than glassy and comes as very fine grains. The sand is fed into a furnace for melting; sometimes small amounts of other materials are added to change the properties of the glass (for instance, lead oxide to produce lead crystal glass, iron to produce brown or green colors, and cobalt salts to produce blue tints).

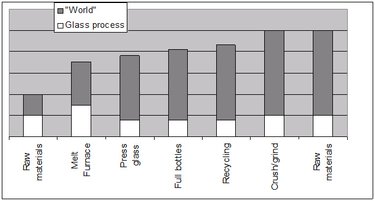

Now let’s go a step further and look at how the steps shown in Figure 4 affect entropy in a qualitative sense (that is, whether the entropy increases or decreases). We’ll do this at the completion of each step, except for the raw-materials step, since that is our starting point. We will also break down the entropy changes throughout the cycle into those of the glass and those of the affected environment at every stage. We’ll call that environment the “World” and define its boundaries so that no further impact of our manufacturing process can be detected outside them. In other words, we’ll have an isolated system in which we can see not only the entropy changes of the glass-making process but also the impact of that process on its environment. We will arbitrarily assign an entropy value to the raw materials and to the World and estimate increases or decreases from that point onward.

The next step is the melting furnace, which can run as hot as 1700 ºC. The silica is now in the liquid state, and we know from the Boltzmann formula that its entropy increases, because more microstates are now available to the molecules. But what happens to the entropy of the World? We need lots of energy to heat the furnace and keep it at high temperature. For that, we can burn fossil fuels, which causes another entropy increase by producing large quantities of CO2 and transforming large amounts of energy. In fact, each ton of glass melted in the furnace requires about 4 gigajoules (GJ)! Thus, we expect the entropy to increase both for the glass and for the World as a whole. In Figure 5, this appears as an increase in the entropy bars.
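The size of the World’s entropy increase from melting can be estimated roughly. This sketch assumes that the roughly 4 GJ per ton quoted above is ultimately dissipated as heat into surroundings at 25 °C, so the entropy increase is about Q/T0; it ignores the entropy change of the combustion reaction itself and any heat retained in the product, so it is an order-of-magnitude figure only.

```python
T_AMBIENT = 298.15             # K, assumed temperature of the surroundings
MELT_ENERGY_PER_TONNE = 4e9    # J, about 4 GJ per ton of glass (figure from the text)

def world_entropy_estimate(energy_j: float, t_ambient: float = T_AMBIENT) -> float:
    """Rough entropy increase (J/K) if energy_j ends up as heat in surroundings at t_ambient."""
    return energy_j / t_ambient

ds = world_entropy_estimate(MELT_ENERGY_PER_TONNE)
print(f"about {ds:.2e} J/K per ton of glass melted")
```

The result, on the order of 10⁷ J/K per ton, dwarfs the entropy changes of the glass itself, which is why the World’s bars in Figure 5 dominate the balance.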

Once the glass has melted in the furnace, the next step is to pour gobs of liquid glass into a mold, where mechanical action or compressed air shapes them into bottles. A carefully controlled cool-down (annealing) step follows to relieve all stress in the bottle so that no cracks occur. As the glass goes from the liquid state to bottle form, its entropy drops, because the material moves to a less chaotic state. For the World, however, we need energy to press the glass into molds, which increases the entropy. In addition, cooling the glass releases heat to the surroundings, again leading to a net entropy increase. The entropy decrease of the bottles is smaller than the entropy increase of the World, so the net result is, once again, an entropy increase of the entire isolated system.

The next step is to fill the bottles with products such as water, beer, soda, medicine, and so on. (We do not expect any substantial entropy change from how these products are used.) Next, let’s assume that all the empty bottles are recycled. Making a bottle from recycled glass, which does not reduce the quality of the glass, saves about 30% on the energy bill, or enough per bottle to light a 100-watt bulb for an hour! Recycling also reduces air pollution by about 20%. How about the entropy balance? To recycle, people have to drive to recycling centers to drop off their empty bottles, and trucks have to transport the bottles to the glass factory. The entropy of the glass does not change much, but the fuel burned on the car and truck trips increases the entropy of the World, and therefore of the entire isolated system. Thus, the energy and pollution gains noted above are at least partly offset.
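The 100-watt-bulb comparison can be cross-checked against the melting figure of about 4 GJ per ton. This sketch assumes, hypothetically, that the 30% saving applies to the melting energy and that a bottle weighs 0.3 kg; neither assumption comes from the text.

```python
MELT_ENERGY_J_PER_KG = 4e6   # 4 GJ per ton = 4 MJ per kg (figure from the text)
BOTTLE_MASS_KG = 0.3         # hypothetical bottle mass (assumption)
RECYCLING_SAVING = 0.30      # about 30% energy saving (figure from the text)
BULB_POWER_W = 100.0

energy_per_bottle = MELT_ENERGY_J_PER_KG * BOTTLE_MASS_KG   # J to melt one bottle
saving_per_bottle = RECYCLING_SAVING * energy_per_bottle     # J saved by recycling
bulb_hours = saving_per_bottle / BULB_POWER_W / 3600.0
print(f"saving: {saving_per_bottle / 1e6:.2f} MJ per bottle = {bulb_hours:.1f} h of a 100 W bulb")
```

Under these assumptions the saving is 0.36 MJ per bottle, exactly one hour of a 100 W bulb, so the two figures quoted in the text are mutually consistent for a bottle of roughly this mass.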

In the glass factory, the bottles must now be crushed. We could argue that the entropy of the glass increases a bit, because crushed glass is in a more chaotic state than the bottles. However, the entropy of the World increases even more, because we use electric power to run the crushing machine. To obtain that electrical power we must burn more fossil fuel, which increases the entropy, as indicated by the higher bar in Figure 5.

At this point we are back to the raw material for bottle production: the crushed glass is mixed with silica sand to feed the melting furnace, and the whole process starts again. Looking only at the glass-making process, we seem to have a closed cycle, as pictured in Figure 4. But when we examine Figure 5 from an entropy point of view, there is nothing like a closed cycle: the entropy has increased since we started production. We have to conclude that recycling glass bottles is not a cyclic process at all.

A plea for a redefinition of efficiency and sustainability

Efficiency and sustainability

The main point Nicholas Georgescu-Roegen made was that economists did not pay sufficient attention to the side effects of economic processes. The situation was often pictured as if there were no issues in obtaining world resources and “better” technology would eventually solve all environmental problems. However, our analysis of the glass-making process shows how wrong this worldview is. Yes, from a purely material point of view we can, at least in theory, continue recycling glass indefinitely, but we now know that, from an entropy perspective, the process constantly increases the entropy of our world. Of course, the world’s entropy increases as part of nature anyway; the point is that unchecked human industry accelerates that increase at a much greater rate.

We have now established that the economic process transforms available energy and the world’s resources into a state of high entropy, and at a much faster rate than natural processes would. (On earth, this increase is partly offset by the inflow of low-entropy solar radiation.) This high-entropy state can be described as the continuous formation of waste by the economic process. This fundamental and mostly hidden mechanism is recognized neither by society nor by mainstream economists, and this blind spot has everything to do with the traditional definition of efficiency. Efficiency is typically seen as how much output a process generates relative to the input it requires in terms of headcount, raw materials, or capital (among other resources). All effort is then focused on increasing output and decreasing input. This is called efficiency improvement, and sometimes economic development. However, if we want to account for world resources, we must at the same time consider how to reduce entropy production. In our bottle-making process, that means considering not only the entropy changes of the glass itself, but also the entropy increase of the World. Given this approach, it would make sense to define something like an entropy efficiency: the total amount of entropy produced per bottle. If we incorporated that kind of efficiency into our general approach to improving processes, we would truly move toward a sustainable economy.
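An entropy-efficiency metric of the kind proposed above could be sketched as follows. This is a hypothetical illustration, not an established method: every step’s energy is assumed to end up as heat in surroundings at 25 °C, so entropy per bottle is approximated as total energy divided by T0, and all per-bottle energy values are illustrative placeholders rather than measured data.

```python
from dataclasses import dataclass

T_AMBIENT = 298.15  # K, assumed temperature of the surroundings

@dataclass
class Step:
    name: str
    energy_j: float  # energy transformed in this step, per bottle (hypothetical value)

def entropy_per_bottle(steps: list[Step], t_ambient: float = T_AMBIENT) -> float:
    """Hypothetical entropy-efficiency metric: estimated World entropy (J/K) per bottle,
    treating all transformed energy as heat dissipated at t_ambient."""
    return sum(step.energy_j for step in steps) / t_ambient

# Illustrative placeholder numbers (J per bottle), not measurements
virgin = [Step("melting", 1.2e6), Step("forming and annealing", 0.2e6)]
recycled = [Step("collection and transport", 0.1e6), Step("crushing", 0.05e6),
            Step("melting (30% less)", 0.84e6), Step("forming and annealing", 0.2e6)]

for label, steps in (("virgin", virgin), ("recycled", recycled)):
    print(f"{label:8s}: {entropy_per_bottle(steps):,.0f} J/K per bottle")
```

Whatever numbers are used, the metric never reaches zero: recycling can lower the entropy produced per bottle, but it cannot turn the process into a closed cycle. Tracking this number, rather than output per unit of input alone, is the kind of efficiency the text argues for.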

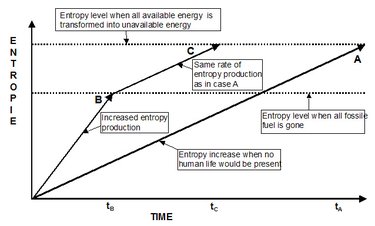

Let’s elaborate a little more on entropy production. We have seen that the entropy of an isolated system increases. Ultimately, the entropy will reach a maximum value, and everything will come to a standstill. Of course, we hope it will take quite some time before we reach that situation. Our economy, however, will come to a standstill long before the entropy of the world reaches that maximum. We know that we need to transform energy every day to keep our economy going. But once all the available energy accessible to us has been transformed, there will be no human life (at least not as we know it today) left on earth, and the rate of entropy production will slow down. Figure 6 captures this situation in a simplified form, which is enough to make our point: it shows how the entropy of the world develops over time.

Line A is our reference case: the entropy production of the world if no human life (or any other “civilized” life form) is present. The entropy steadily increases, per the Second Law of Thermodynamics, until time tA, when all available energy has finally been transformed into unavailable energy. This will likely take hundreds of millions of years.

In case B, represented by arrow B, there is human life on earth, including the entropy-inefficient economic processes described above. The rate of entropy production is now higher than in case A, and it stays higher until we have transformed all the fossil energy and other natural resources on the planet. At that time, tB, virtually no economic (industrial) activity is possible, with serious consequences for human life. Clearly, tB can be much shorter than tA. This shows that, however much we recycle, we leave future generations much less time to live on earth, because entropy itself cannot be recycled. After tB, entropy production continues at roughly the same rate as in case A, since no significant human activity remains. The only difference is that the maximum entropy is reached at time tC, earlier than in case A.
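The timing argument of Figure 6 can be checked with a toy model. The sketch below assumes, as our own simplification rather than the figure's exact shape, that cumulative entropy grows linearly: at a background rate rA in case A, and at a higher rate rB until tB in case B, after which it falls back to rA. The function `time_to_max_entropy` and the rates are hypothetical, in arbitrary units.

```python
def time_to_max_entropy(s_max: float, r_a: float, r_b: float = 0.0, t_b: float = 0.0) -> float:
    """Time at which cumulative entropy production reaches s_max.

    Toy model (assumption): entropy grows at rate r_b until t_b (human
    activity), then at the background rate r_a. With t_b = 0 this reduces
    to case A, where the maximum is reached at s_max / r_a.
    """
    if s_max <= 0 or r_a <= 0:
        raise ValueError("s_max and r_a must be positive")
    produced_by_t_b = r_b * t_b
    if produced_by_t_b >= s_max:
        # The maximum is reached during the high-rate phase itself.
        return s_max / r_b
    # Remaining entropy is produced at the background rate after t_b.
    return t_b + (s_max - produced_by_t_b) / r_a


if __name__ == "__main__":
    # Arbitrary units: background rate 1, human-era rate 50 lasting 1000 time units.
    t_a = time_to_max_entropy(s_max=1.0e6, r_a=1.0)
    t_c = time_to_max_entropy(s_max=1.0e6, r_a=1.0, r_b=50.0, t_b=1000.0)
    print(t_a, t_c)  # prints 1000000.0 951000.0
```

In this model the maximum arrives earlier by (rB - rA)·tB/rA, so every unit of time spent at the high rate costs rB/rA units of time at the background rate.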

Although the concept of entropy reveals some severe limitations of our current way of life, it is fair to say that it has never caught on in the mainstream economics literature. One can find publications both for and against the use of entropy in economic analysis. The ideas of Georgescu-Roegen did, however, find many supporters in the ecological-economics literature. Mainstream economists, despite the entropy arguments of the ecological economists, believe that technology will solve the scarcity of energy and materials. And it is truly difficult to predict the future of technology, as illustrated by the often-quoted (and possibly apocryphal) 1876 Western Union memo declaring, “The telephone has too many shortcomings to be seriously considered as a means of communication. The device has no value.” Solar power is a case in point: it has been widely proposed to convert the sun’s energy into electrical power using semiconductor-based solar devices. This is an option to which we should give more thought, since the sun is a nearly inexhaustible source of energy. The solar energy reaching the surface of the US amounts to about 50,000 quads per year (1 quad is one quadrillion BTU), whereas the US transforms about 100 quads of available energy into non-available energy each year. On paper, then, we have a tremendous potential, a real bonanza. However, a few caveats are in order. First, the conversion efficiency of a commercial solar cell is at best about 15% (although technological progress could raise that number by a factor of two), so the potential 50,000 quads shrink to about 7,500 usable quads. Second, we cannot (and don’t want to) cover the entire surface of the US with solar panels; if we use only about 1% of the land area, the 7,500 quads shrink to about 75 accessible quads. Even so, that figure is still comparable to yearly US energy consumption. Thus, solar energy could become a viable renewable power source that would make our society more sustainable.
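The back-of-the-envelope solar estimate is simply a chain of multiplications. The sketch below reproduces it using the figures given in the text (50,000 quads of incident sunlight, 15% cell efficiency, 1% of the land area, 100 quads of yearly US use); the function name `accessible_solar_quads` is hypothetical, and the doubled efficiency of 30% corresponds to the technological improvement the text mentions.

```python
def accessible_solar_quads(incident_quads: float, cell_efficiency: float,
                           land_fraction: float) -> float:
    """Electrical energy (quads per year) obtained by covering a fraction of the
    land with solar cells of the given conversion efficiency."""
    for name, value in (("cell_efficiency", cell_efficiency),
                        ("land_fraction", land_fraction)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return incident_quads * cell_efficiency * land_fraction


if __name__ == "__main__":
    US_SOLAR_QUADS = 50_000   # solar energy reaching the US surface per year (from the text)
    US_USE_QUADS = 100        # yearly US energy transformation (from the text)
    for eff in (0.15, 0.30):  # best-case efficiency today, and the doubled value
        q = accessible_solar_quads(US_SOLAR_QUADS, eff, land_fraction=0.01)
        print(f"efficiency {eff:.0%}: about {q:.0f} quads/year, {q / US_USE_QUADS:.0%} of US use")
```

Because the estimate is a product, each factor scales the result proportionally: doubling either the cell efficiency or the land fraction doubles the accessible energy.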

Transformation of terrestrial resources from available to non-available

An adult human must transform an average of about 8,000 kilojoules (kJ) of food energy per day to survive physically. However, if we include all the other energy used to produce cars, electricity, processed foods, and other products for everyday living, the average person in an industrialized country such as the US transforms about 800,000 kJ a day, roughly a hundred times as much. Since everything in nature, even matter, is in essence energy (through the mass-energy equivalence of relativity), we should not be surprised that the Second Law of Thermodynamics also rules economic processes. There is no escape: every time we transform energy, its exergy (the part available to do work) decreases. The Second Law quantifies how much becomes unavailable.
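The link between exergy loss and the Second Law can be made explicit with a standard textbook relation (often called the Gouy-Stodola theorem): the exergy destroyed equals the ambient temperature T0 times the entropy generated. The sketch below checks this for the simplest case, heat leaking directly from a hot source to the surroundings; the function names and all temperatures and amounts are hypothetical choices for illustration.

```python
def heat_exergy(q_j: float, t_source_k: float, t0_k: float) -> float:
    """Maximum work (J) obtainable from heat q_j available at t_source_k when
    the surroundings are at t0_k (the Carnot limit)."""
    return q_j * (1.0 - t0_k / t_source_k)


def entropy_generated(q_j: float, t_hot_k: float, t_cold_k: float) -> float:
    """Entropy produced (J/K) when heat q_j flows directly from t_hot_k to t_cold_k."""
    return q_j / t_cold_k - q_j / t_hot_k


if __name__ == "__main__":
    t0 = 298.15      # assumed ambient temperature (K)
    q = 1000.0       # 1 kJ of heat, a hypothetical amount
    t_hot = 900.0    # hypothetical source temperature (K)
    # Let the heat leak straight to the surroundings: the energy is conserved,
    # but its exergy drops from heat_exergy(q, t_hot, t0) to zero.
    exergy_lost = heat_exergy(q, t_hot, t0) - heat_exergy(q, t0, t0)
    print(f"exergy lost:            {exergy_lost:.1f} J")
    print(f"T0 x entropy generated: {t0 * entropy_generated(q, t_hot, t0):.1f} J")
```

The two printed values agree: the full kilojoule still exists as warm surroundings, but the part that could have done work is gone, and its size is set by the entropy produced.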

The total amount of energy remains constant, but does the same hold for materials? Consider iron. Iron is a stable element, which means that the total number of iron atoms on earth does not change. Iron is mined from areas rich in iron ore and then liberated, through a suitable process such as smelting, from its bonds with other elements such as oxygen. The iron is then used in all kinds of goods, from screws to automobile bodies to train tracks. After these products have served their purposes, it might seem possible to recycle 100% of the iron, but in fact this is not true at all. In the case of train tracks, for instance, every time a train runs or brakes on the rails, it wears them down and throws fine iron particles into the air. This process is comparable to gas diffusion, in which gas atoms or molecules always spread out to occupy the maximum space available. (This is another instance of entropy increase.) Thus, a certain amount of the iron built into products cannot easily be recycled and is in fact lost to the economic process. This loss is very comparable to the transformation of available energy into non-available energy.
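Why dispersed iron is so hard to recover can be illustrated with the ideal-mixture result for the minimum work needed to extract a dilute component from a large mixture at mole fraction x, namely -RT ln x per mole. Treating scattered iron particles as an ideal dilute component is a strong idealization, real recovery costs far more, and the sketch ignores the chemical energy of breaking bonds; the function name and the mole fractions are hypothetical.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol*K)


def min_work_to_recover(mole_fraction: float, t0_k: float = 298.15) -> float:
    """Ideal minimum work (J per mol) to extract a dilute component at the
    given mole fraction into pure form, from a large ideal mixture at t0_k.

    Idealization (assumption): counts only the entropy of dispersal, not
    chemical bonding or the practical inefficiency of real separation.
    """
    if not 0.0 < mole_fraction <= 1.0:
        raise ValueError("mole_fraction must be in (0, 1]")
    return -R * t0_k * math.log(mole_fraction)


if __name__ == "__main__":
    # Hypothetical mole fractions: sorted scrap versus wear dust spread through soil.
    for label, x in [("sorted scrap, x = 0.99", 0.99),
                     ("dispersed dust, x = 1e-6", 1e-6)]:
        print(f"{label}: {min_work_to_recover(x) / 1000:.2f} kJ/mol")
```

The logarithm means the cost keeps rising as the iron spreads out: every factor of ten in dilution adds the same fixed amount of minimum work per mole, and no amount of ingenuity can push the real cost below that bound.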

Summary of entropy and economy

In the economics literature, one can find two opposing points of view: mainstream economists, who believe that technological innovation will overcome the degradation in quality of both energy and materials and that growth can therefore go on forever; and biophysical economists, who use the laws of thermodynamics to argue that mainstream economic models do not incorporate long-term sustainability. For instance, the costs of repairing the ozone hole or mitigating increasing pollution are not accounted for in mainstream economic assessments. We have seen how industrial and agricultural processes accelerate entropy production in our world. Entropy production can go on only until all available energy has been transformed into non-available energy, and the faster we approach this end, the less freedom we leave for future generations. If entropy production were included in all economic models, the efficiency of standard industrial processes would look quite different.

Even if there were no humans on this planet, entropy would still be produced continuously. So from that point of view the ecological system is not perfect either; even the sun has a limited lifespan. The real problem for us is that, in our relentless effort to speed things up, we increase the rate of entropy production tremendously. In fact, economic systems resemble organisms in one respect: both take in low-entropy resources and give off high-entropy waste. This leaves fewer resources for future generations.

Although recycling helps greatly to slow the depletion of the earth’s stocks of materials, it only partly reduces entropy production. So whenever we design or develop economic or industrial processes, we should also examine their rate of entropy production compared with the natural “background” entropy production. We have seen that reversible processes produce less entropy than irreversible ones (in the ideal limit, none at all). Because a reversible process must run infinitely slowly, the practical translation is that high-speed processes generally accelerate the rate of entropy production in the world. Going shopping on your bike is clearly a much better entropy choice than using your car. You could say that the entropy clock is ticking, and it can only go forward!
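The claim that speed costs entropy can be illustrated with heat transfer. The sketch below assumes a linear (Newton-type) heat-transfer law, in which delivering heat faster through the same conductance requires a larger temperature difference, and computes the entropy generated per joule delivered; the function name, the conductance of 10 W/K, and the temperatures are hypothetical. It is a stand-in for the general argument and does not model bicycles or cars.

```python
def entropy_per_joule(power_w: float, conductance_w_per_k: float, t_cold_k: float) -> float:
    """Entropy generated (J/K) per joule of heat delivered at a given rate.

    Assumes a linear heat-transfer law, power = K * (T_hot - T_cold): delivering
    heat faster through the same conductance K needs a larger temperature drop.
    """
    if power_w < 0 or conductance_w_per_k <= 0 or t_cold_k <= 0:
        raise ValueError("need power_w >= 0, conductance_w_per_k > 0, t_cold_k > 0")
    t_hot_k = t_cold_k + power_w / conductance_w_per_k
    return 1.0 / t_cold_k - 1.0 / t_hot_k


if __name__ == "__main__":
    # Hypothetical conductance of 10 W/K into surroundings at 300 K.
    for power in (0.0, 10.0, 100.0, 1000.0):
        s = entropy_per_joule(power, 10.0, 300.0)
        print(f"{power:6.0f} W -> {s:.2e} J/K per joule delivered")
```

At zero power, the infinitely slow reversible limit, no entropy is generated; for the same total amount of heat, every increase in delivery rate raises the entropy produced.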

Further Reading

- Etheridge, D.M., L.P. Steele, R.L. Langenfelds, R.J. Francey, J.-M. Barnola and V.I. Morgan, 1996. Natural and anthropogenic changes in atmospheric CO2 over the last 1000 years from air in Antarctic ice and firn. Journal of Geophysical Research, 101(D2):4115-4128.

- Etheridge, D.M., L.P. Steele, R.L. Langenfelds, R.J. Francey, J.-M. Barnola and V.I. Morgan, 1998. Historical carbon dioxide records from the Law Dome DE08, DE08-2 and DSS ice cores. In Trends: A Compendium of Data on Global Change. Carbon Dioxide Information Analysis Center, Oak Ridge National Laboratory, Oak Ridge, Tenn, USA.

- Georgescu-Roegen, Nicolas, 1971. The Entropy Law and the Economic Process. Harvard University Press, Cambridge, Massachusetts.

- Intergovernmental Panel on Climate Change (IPCC), 2007. Fourth Assessment Report, Working Group III contribution.

- Mirowski, Philip, 1988. Against Mechanism: Protecting Economics from Science. Rowman & Littlefield Publishers, Lanham, MD. ISBN: 0847674363

- Rees, William E., 1996. Revisiting Carrying Capacity: Area-Based Indicators of Sustainability. Population and Environment: A Journal of Interdisciplinary Studies, 17(3).

- Rifkin, Jeremy, 1989. Entropy: Into the Greenhouse World. Bantam Books, New York. ISBN: 0553347179

- Schmitz, John E.J., 2007. The Second Law of Life: Energy, Technology, and the Future of Earth As We Know It. William Andrew Publishing, Norwich, NY. ISBN: 0815515375