Open modeling (Environmental & Earth Science)

Contents

- 1 Open modeling

- 1.1 Introduction

- 1.2 Adaptive management

- 1.3 Open Modeling

- 1.4 What’s Next?

- 1.5 Further Reading

Open modeling

Introduction

The development of Open modeling, Internet-facilitated participatory environmental decision support models, holds significant promise for improving the outcomes of collaborative adaptive management of complex environmental systems. From the outset of the modern environmental movement in the 1970s, major legislation such as the National Environmental Policy Act (NEPA) and the Clean Water Act (CWA) simultaneously embraced two fundamentally different managerial approaches to achieving environmental sustainability:

- “Systems modeling”: extending the century-long advances associated with logical positivism and professionalized administration, this approach embraced the newly available capabilities of computerized mathematical models to generate science-based predictions of the future states of complex environments, based on analysis of the transport and transformation of energy and matter in time and space; and

- “Strong democracy”: responding to widespread distrust of large centralized governments, this approach shifted toward collaborative decision making involving multiple actors and stakeholders, made knowledgeable about the issues through information sharing and extended opportunities for public discourse.

For the past three decades, the typical administrative implementation of these required approaches has been serial. First, technical analysts (often employed by project sponsors and working in a closed environment) develop the required predictive models and analyze a selected set of scenarios. Next, highly selected versions of the results are disseminated for public comment in documents such as Draft Environmental Impact Statements. Finally, elected or appointed decision makers select a course of action. The desired administrative outcome has been efficient “one-stop” decision making, for example, issuing a 40-year operating permit to a major hydroelectric dam that will significantly affect a region’s ecosystems, indigenous peoples, agriculture, and industrial development.

Results of this weakly connected multi-stage sequence have been highly mixed. It is now widely accepted in the scientific community that, because environmental systems are complex and open to outside influences (global warming, invasive species, oil prices, etc.), our ability to accurately predict their long-range future states is quite limited.

“The Comprehensive Everglades Restoration Plan is highly dependent on the results of dynamic regional hydrologic and ecological simulation models. Even though these models, and those that may eventually replace them are, and will continue to be relatively complex and sophisticated, they only crudely approximate what actually takes place in the Everglades…the likelihood of capturing all the processes occurring in a system as complex as the Everglades within simulation models is low, …hence those involved will have to take this inevitable uncertainty into account” (Central and South Florida Project, 2002, p. 3).

Such candor within technical groups mirrors the long history of widespread distrust, among many interest groups and decision makers, of the inherently closed, black-box nature of systems modeling and of its frequent use by project advocates to rationalize their positions. As a result, many important decisions have not been made in a timely fashion, or have ultimately been made by the judiciary.

Adaptive management

In recent years, Adaptive Management (AM) has emerged as a promising alternative to “one-stop” decisions.

“No plan can anticipate fully the uncertainties that are inherent in predicting how a complex ecosystem will respond during restoration efforts…For these and many other reasons, the ways in which this ecosystem will respond to the recovery of more natural water patterns almost certainly will include some surprises. The recommended Comprehensive Plan anticipates such surprises and is designed to facilitate project modifications that take advantage of what is learned from system responses, both expected and unexpected, and from future restoration targets as those become more refined.” (Central and South Florida Project, 1999, p. xiii).

AM has four central tenets:

- We frequently have to make major public decisions now that have uncertain long-term outcomes;

- Systems modeling can provide guidance, but because of large degrees of inherent uncertainty, decision makers will need to build in mid-range flexibility informed by monitoring and model evolution;

- Rather than being made in one stop, decisions (regarding both modeling and actions) will evolve as a punctuated cascade over extended time frames; and

- Because all such decisions distribute expected positive and negative effects among different stakeholders, collaborative decision makers with different levels of technical sophistication will need to understand the basis and limitations of the modeling, accept it as essentially unbiased, and be able to use the models to explore scenarios of interest to their constituencies.
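The tenets above describe a repeated loop of deciding, acting, monitoring, and revising the model. The Python sketch below illustrates that loop under loudly hypothetical assumptions: a single well-mixed lake described by the textbook steady-state mass balance C = W / (Q + kV), an unknown first-order loss rate k, and a manager who each cycle sets the largest pollutant load W the current model predicts will meet a concentration target, then observes the result and re-estimates k from the monitoring record. All names (`predict`, `choose_load`, `infer_k`, `adaptive_cycles`) and all numbers are illustrative, not taken from any real program.

```python
import random
import statistics

# Hypothetical lake properties (illustrative only).
FLOW = 100.0     # outflow Q, volume per year
VOLUME = 1000.0  # lake volume V


def predict(load, k):
    """Steady-state concentration of a well-mixed lake: C = W / (Q + k*V)."""
    return load / (FLOW + k * VOLUME)


def choose_load(target, k_est):
    """Largest load W that the current model predicts will keep C at the target."""
    return target * (FLOW + k_est * VOLUME)


def infer_k(load, observed_c):
    """Invert the model to estimate k from one monitored concentration."""
    return (load / observed_c - FLOW) / VOLUME


def adaptive_cycles(k_true, k_initial, target, cycles, noise_sd=0.0, seed=0):
    """Run decide -> act -> monitor -> recalibrate cycles; return successive k estimates."""
    rng = random.Random(seed)
    k_est = k_initial
    k_observations = []
    history = [k_est]
    for _ in range(cycles):
        load = choose_load(target, k_est)                     # decide and act
        noise = 1 + rng.gauss(0, noise_sd)                    # imperfect monitoring
        observed = predict(load, k_true) * noise              # monitor
        k_observations.append(infer_k(load, observed))
        k_est = statistics.mean(k_observations)               # revise the model
        history.append(k_est)
    return history


if __name__ == "__main__":
    for cycle, k in enumerate(adaptive_cycles(0.3, 0.5, 1.0, 5, noise_sd=0.05)):
        print(f"cycle {cycle}: estimated k = {k:.3f}")
```

Averaging every inferred k is only one possible updating rule; an actual program might weight recent monitoring more heavily or use a formal Bayesian update.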

Open Modeling

Although better information and improved discourse will not necessarily lead to more sustainable environments, Internet-facilitated Open Modeling (OM) has the potential to substantially ease the transition from traditional decision making to AM. Figure 1 depicts the three key dimensions (axes) of OM: Model Transparency, Information Quality Disclosure, and Participatory "What If?".

Model Transparency

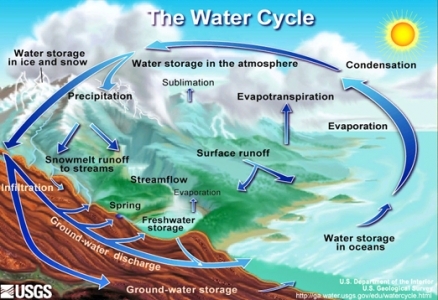

OM is designed to empower all actors and stakeholders (not just the model developers and technical analysts) as a community of modeling end users. Model Transparency provides end users with a basic understanding of the model’s focus and logical structure. Moving beyond the current “black box”, pictorial flow charts can illustrate the major components and relationships of the modeled process, such as the hydrological cycle or acid rain. Models are, by definition, highly simplified versions of “reality”, so in OM all major simplifying assumptions are stated explicitly; an example would be the assumption that the recent trend in per-capita energy consumption will continue. At the heart of environmental processes is the transport and transformation of matter and energy in space and time. Data availability and computational limitations necessarily require “chunking” the environment in one, two, or three spatial dimensions, and in time. For example, a lake model may use building blocks, each assumed to be homogeneous, defined by three depth zones, a surface grid of cells 10 miles on a side, and yearly time steps. Technical end users will also need access to the underlying equations and data sets.
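To make stated assumptions and “chunking” concrete, here is a minimal Python sketch of the lake example: each surface grid cell holds a column of three depth zones, each zone is treated as homogeneous, and the state advances in yearly steps. The exchange and loss rates, the grid size, and all names (`LakeAssumptions`, `step_column`, `run`) are hypothetical; the point is only that every simplification sits in one explicit, inspectable object rather than inside a black box.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LakeAssumptions:
    """Every major simplifying assumption, stated explicitly (values hypothetical)."""
    depth_zones: int = 3            # each zone assumed homogeneous (well mixed)
    cell_size_miles: float = 10.0   # surface grid cells 10 miles on a side
    time_step_years: float = 1.0    # one iteration per year
    vertical_exchange: float = 0.2  # fraction of adjacent-zone difference exchanged per year
    loss_rate: float = 0.1          # first-order loss per year (e.g. settling, decay)
    # Further assumption: no horizontal exchange between grid cells.


def step_column(conc, a):
    """Advance one cell's column of depth-zone concentrations by one time step."""
    new = list(conc)
    for i in range(len(conc) - 1):
        # Explicit scheme: fluxes use concentrations from the start of the step.
        flux = a.vertical_exchange * (conc[i] - conc[i + 1]) * a.time_step_years
        new[i] -= flux
        new[i + 1] += flux
    return [c * (1 - a.loss_rate * a.time_step_years) for c in new]


def run(grid, a, years):
    """Advance every cell of the surface grid through the given number of years."""
    for _ in range(int(years / a.time_step_years)):
        grid = {cell: step_column(column, a) for cell, column in grid.items()}
    return grid


if __name__ == "__main__":
    a = LakeAssumptions()
    # A 2 x 2 surface grid; pollutant initially only in the top zone of one cell.
    grid = {(r, c): [0.0] * a.depth_zones for r in range(2) for c in range(2)}
    grid[(0, 0)][0] = 10.0
    print(a)
    print(run(grid, a, years=3)[(0, 0)])
```

Printing the assumptions object alongside the results is one simple way to keep the simplifications visible to every end user.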

Information Quality Disclosure

Because of the uncertainty involved in all modeling, Information Quality Disclosure is essential to OM. The first step outward along this axis from the black-box origin of Fig. 1 is the candid statement of significant figures for all quantitative estimates (including tables, graphs, and maps), a pre-digital professional practice that has all but disappeared in recent years. Ideally, models are calibrated to fit local historical data and then verified against a second, independent data set, but this is frequently not feasible. End users need a basic understanding of this ideal process and of the lineage of the actual modeling situation in which they are involved. Sensitivity analysis is a tool for identifying, one variable at a time, which variables appear to have the greatest effect on the model’s output predictions; it is frequently used in model development and in decisions about monitoring. In contrast, error propagation (using techniques such as Monte Carlo analysis) provides a means of estimating the range of model predictions that results from the uncertainties of all model elements interacting dynamically. Full OM entails error-propagation estimates of expected prediction ranges.
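The contrast between significant figures, one-at-a-time sensitivity analysis, and Monte Carlo error propagation can be shown on a single toy model. The sketch below assumes the textbook steady-state concentration of a well-mixed lake, C = W / (Q + kV), with hypothetical nominal inputs and hypothetical normally distributed uncertainties; the function names and all numbers are illustrative. The sensitivity step raises each input alone by 10%, while the Monte Carlo step samples all inputs together and reports a 90% range (5th to 95th percentile) of the prediction.

```python
import math
import random
import statistics


def concentration(load, flow, k, volume):
    """Steady-state well-mixed lake: C = W / (Q + k*V)."""
    return load / (flow + k * volume)


# Hypothetical nominal inputs and standard deviations (illustrative only).
NOMINAL = {"load": 500.0, "flow": 100.0, "k": 0.3, "volume": 1000.0}
STD_DEV = {"load": 75.0, "flow": 15.0, "k": 0.06, "volume": 50.0}


def round_sig(x, sig):
    """Round x to `sig` significant figures for honest reporting."""
    if x == 0:
        return 0.0
    return round(x, sig - 1 - math.floor(math.log10(abs(x))))


def one_at_a_time_sensitivity(model, nominal, fraction=0.10):
    """Relative change in output when each input alone is raised by `fraction`."""
    base = model(**nominal)
    result = {}
    for name in nominal:
        perturbed = dict(nominal, **{name: nominal[name] * (1 + fraction)})
        result[name] = (model(**perturbed) - base) / base
    return result


def monte_carlo_range(model, nominal, std_dev, n=10_000, seed=1):
    """Propagate all input uncertainties together; return 5th and 95th percentiles."""
    rng = random.Random(seed)
    outputs = []
    for _ in range(n):
        sample = {name: rng.gauss(nominal[name], std_dev[name]) for name in nominal}
        outputs.append(model(**sample))
    cuts = statistics.quantiles(outputs, n=20)  # 19 cut points: 5%, 10%, ..., 95%
    return cuts[0], cuts[-1]


if __name__ == "__main__":
    base = concentration(**NOMINAL)
    print("prediction:", round_sig(base, 2))
    for name, rel in one_at_a_time_sensitivity(concentration, NOMINAL).items():
        print(f"  +10% {name}: {rel:+.1%} change")
    low, high = monte_carlo_range(concentration, NOMINAL, STD_DEV)
    print("90% range:", round_sig(low, 2), "to", round_sig(high, 2))
```

Note that the 10% perturbations say nothing about how uncertain each input actually is, whereas the Monte Carlo range depends entirely on the assumed standard deviations; both choices here are hypothetical.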

Participatory "What If?"

Finally, the Participatory "What If?" axis of Fig. 1 provides the key to Internet-facilitated OM. Here, end users representing different interests and technical capabilities can interact with the models remotely, at their own convenience and from their own locations, to explore the expected environmental and socio-economic outcomes of alternative decision scenarios, including the “null” (do-nothing) option. Unlike the black box’s predetermined model construction and option alternatives, OM would provide the capacity to explore changes in model assumptions and parameters, as well as decision alternatives, including “blends” of combinations and permutations of major alternatives. Essential to this new capability will be a customizable end-user interface that lets users select content formats along a continuum from general to technical, reflecting the multiplicity of publics involved.
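One way to picture the “blending” of alternatives is as a weighted mix of the decision levers that define each scenario. In the Python sketch below, each alternative (including the null, do-nothing option) is a set of hypothetical levers, here the fractional reduction in pollutant load and in upstream water withdrawals; a blend is a weighted average of those levers; and a toy steady-state lake model, C = W / (Q + kV), reports the outcome at either a general or a technical level of detail. The alternatives, levers, model, units, and numbers are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alternative:
    """A decision scenario defined by hypothetical policy levers (fractions 0..1)."""
    name: str
    load_reduction: float
    withdrawal_reduction: float


NULL = Alternative("null (do nothing)", 0.0, 0.0)
ALT_A = Alternative("A: treatment upgrades", 0.4, 0.0)
ALT_B = Alternative("B: water conservation", 0.0, 0.5)


def blend(weights, name="blend"):
    """Weighted mix of alternatives' levers; weights are normalized to sum to 1."""
    total = sum(weights.values())
    load = sum(alt.load_reduction * w for alt, w in weights.items()) / total
    withdrawal = sum(alt.withdrawal_reduction * w for alt, w in weights.items()) / total
    return Alternative(name, load, withdrawal)


def outcome(alt, base_load=500.0, base_flow=80.0, withdrawals=40.0, k=0.3, volume=1000.0):
    """Toy steady-state concentration C = W / (Q + k*V) under an alternative."""
    load = base_load * (1 - alt.load_reduction)
    # Assumption: water no longer withdrawn upstream adds to the lake's through-flow.
    flow = base_flow + withdrawals * alt.withdrawal_reduction
    return load / (flow + k * volume)


def describe(alt, level="general"):
    """Report the same result at a general or a technical level of detail."""
    c = outcome(alt)
    if level == "general":
        return f"{alt.name}: concentration about {c:.1f} units"
    return (f"{alt.name}: C = {c:.3f} (load reduction {alt.load_reduction:.2f}, "
            f"withdrawal reduction {alt.withdrawal_reduction:.2f})")


if __name__ == "__main__":
    half_and_half = blend({ALT_A: 0.5, ALT_B: 0.5}, name="50/50 blend of A and B")
    for alt in (NULL, ALT_A, ALT_B, half_and_half):
        print(describe(alt))
    print(describe(half_and_half, level="technical"))
```

Because blends are just new `Alternative` values, any number of combinations can be explored with the same model and the same reporting levels.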

What’s Next?

The transition from traditional closed “one-stop” environmental decisions to AM has been slow, due in large part to the huge inertia of current legacy administrative systems. Ad hoc elements of all three dimensions of Internet-facilitated OM currently exist, but there is a policy vacuum: no articulated policy on whether agencies should encourage or require its full development. In the U.S., information policies continue to focus primarily on e-government efficiency, for example, some agencies’ posting of Draft Environmental Impact Statements on the Internet and their solicitation of e-comments. Agencies have funded numerous modeling studies aimed at scientists, at decision makers, or at public education, but to date no integrated approach has emerged. Major advances have taken place in the Internet distribution of environmental data, such as the U.S. Environmental Protection Agency’s (EPA) data warehouse and the Department of the Interior’s National Map. However, data and information by themselves are neither knowledge nor wisdom. If these efforts were plotted on Fig. 1, they would lie close to the black-box origin.

The fundamental policy change that would catalyze this transformation is a mandate for public disclosure of modeling predictive quality through required posting of expected ranges. In 1978, the new NEPA regulations for impact statements required:

"Incomplete or Unavailable Information:

When an agency is evaluating reasonably foreseeable significant adverse effects on the human environment in an environmental impact statement and there is incomplete or unavailable information, the agency shall always make clear that such information is lacking." (40 C.F.R. 1502.22)

"Methodology and Scientific Accuracy:

Agencies shall insure the professional integrity, including scientific integrity, of the discussions and analyses in environmental impact statements. They shall identify any such methodologies used and …may place discussion of methodology in an appendix." (40 C.F.R. 1502.24)

With regard to requiring explicit estimates of error propagation in computer models, these well-intended generalities, which encompass all modeling, have been uniformly ignored both by the agencies and by the administratively responsible Council on Environmental Quality and EPA.

Although most analysts agree that the origins of the recent U.S. Data Quality Act were pro-business and anti-environmental, the Act does give the White House Office of Management and Budget (OMB) the potential to require all environmental models to include error estimates: “(a) The Director of O.M.B. shall… provide guidance to federal agencies for ensuring and maximizing the quality, objectivity, utility, and integrity of information (including statistical information) disseminated by federal agencies…”

To date, both OMB and the agencies have emphasized the use of elite appointed scientific review panels and essentially ignored quality range estimates except for human health risk assessments.

Further Reading

- Central and South Florida Project, 1999. Final Integrated Feasibility Report and Programmatic Environmental Impact Statement.

- Central and South Florida Project, 2002. Comprehensive Everglades Restoration Plan: Model Uncertainty Workshop Report.

- U.S. Council on Environmental Quality, 1978. National Environmental Policy Act Regulations, 40 C.F.R. 1500 et seq.

- Lee, Kai, 1993. Compass and Gyroscope: Integrating Science and Politics for the Environment. Washington, D.C.: Island Press. ISBN: 1559631988

- Sarewitz, D., et al., eds., 2000. Prediction: Science, Decision Making, and the Future of Nature. Washington, D.C.: Island Press. ISBN: 1559637765

- U.S. Data Quality Act, 2000. Sec. 515 of the Treasury and General Government Appropriations Act for Fiscal Year 2001 (P.L. 106-554).